Deep Learning with Solid Models

The objects around us (chairs, cars, devices, and so on) are almost always designed as solid models in CAD, yet surprisingly few deep learning approaches have been applied to solid models to date. This makes it difficult to explore how learning-based methods might enhance future CAD tools with features such as auto-complete or interpolation between CAD models. Part of the challenge has been the availability of suitable data, and part has been teaching these algorithms to speak CAD. We tackled both challenges over the course of several collaborations between Autodesk Research and MIT, Brown University, and UCL, with results published at CVPR and SIGGRAPH.

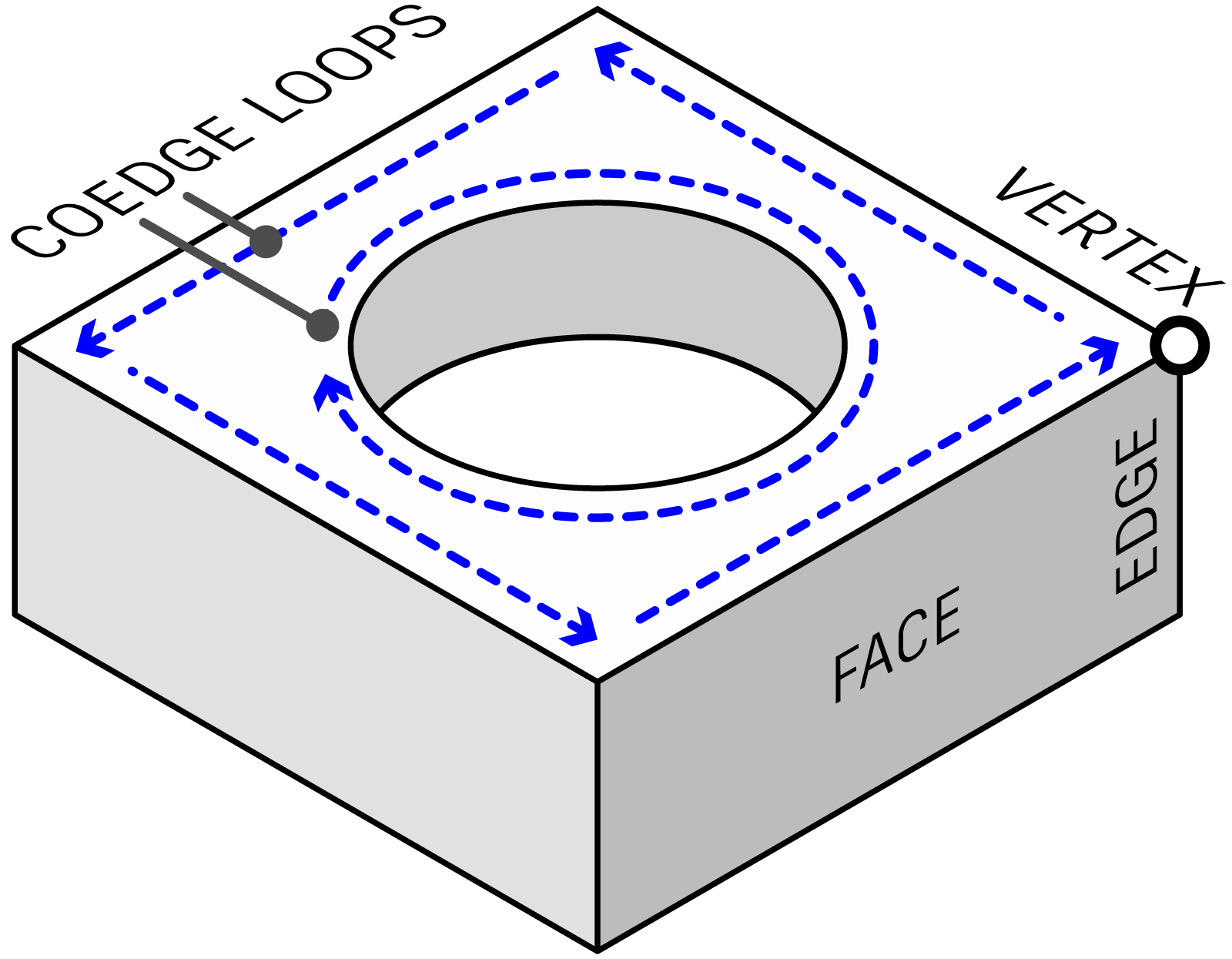

Solid models use the boundary representation, or B-Rep. Think of a B-Rep as a watertight mesh where the faces don’t need to be flat and can be trimmed to any shape. These faces are ‘glued’ together along shared edges, with the topology forming a well-structured graph.
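To make the "topology as a graph" idea concrete, here is a minimal sketch in plain Python. It is not taken from any of the projects described here: the cube, the face names, and the helpers `build_face_graph` and `is_watertight` are all hypothetical, and real B-Reps also carry surface and curve geometry that this toy example ignores. It only shows how faces glued along shared edges give a face-adjacency graph.

```python
from collections import defaultdict

# Hypothetical toy solid: a cube given only by its topology.
# Vertex index = x + 2*y + 4*z; each face lists its corner vertices in loop order.
CUBE_FACES = {
    "x0": [0, 2, 6, 4], "x1": [1, 3, 7, 5],
    "y0": [0, 1, 5, 4], "y1": [2, 3, 7, 6],
    "z0": [0, 1, 3, 2], "z1": [4, 5, 7, 6],
}

def edges_of(loop):
    """Return the undirected edges bounding a face loop."""
    n = len(loop)
    return [frozenset((loop[i], loop[(i + 1) % n])) for i in range(n)]

def build_face_graph(faces):
    """Map each edge to the faces it bounds, then link faces that share an edge."""
    edge_to_faces = defaultdict(list)
    for name, loop in faces.items():
        for edge in edges_of(loop):
            edge_to_faces[edge].append(name)
    adjacency = {name: set() for name in faces}
    for shared in edge_to_faces.values():
        if len(shared) == 2:
            a, b = shared
            adjacency[a].add(b)
            adjacency[b].add(a)
    return edge_to_faces, adjacency

def is_watertight(edge_to_faces):
    """In this toy model, watertight means every edge is shared by exactly two faces."""
    return all(len(shared) == 2 for shared in edge_to_faces.values())

edge_to_faces, adjacency = build_face_graph(CUBE_FACES)
print(len(edge_to_faces), is_watertight(edge_to_faces))
print(sorted(adjacency["x0"]))
```

The resulting `adjacency` dictionary is the kind of graph a graph neural network could consume, with one node per face and one link per shared edge.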

In UV-Net (Paper, Code) we use this graph with a GNN and sample a point grid on each face for use with a CNN. This combination outperforms mesh and point cloud methods on both classification and segmentation.
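As a rough illustration of sampling a point grid on a face, the sketch below evaluates a parametric surface on a regular grid in its (u, v) domain. This is an assumption-laden toy, not the UV-Net code: the function names `sample_uv_grid` and `cylinder`, the grid size, and the choice of an untrimmed cylinder are all hypothetical, and a real pipeline would query a CAD kernel and account for trimming.

```python
import math

def cylinder(u, v, radius=1.0):
    """Hypothetical parametric face: a cylinder wall, u is the angle, v the height."""
    return (radius * math.cos(u), radius * math.sin(u), v)

def sample_uv_grid(surface, u_range, v_range, n_u=4, n_v=4):
    """Evaluate `surface` on a regular n_u x n_v grid (both must be >= 2)."""
    u0, u1 = u_range
    v0, v1 = v_range
    grid = []
    for i in range(n_u):
        u = u0 + (u1 - u0) * i / (n_u - 1)
        row = []
        for j in range(n_v):
            v = v0 + (v1 - v0) * j / (n_v - 1)
            row.append(surface(u, v))
        grid.append(row)
    return grid

# A 5 x 3 grid of (x, y, z) points; like an image, it has a fixed rectangular layout.
grid = sample_uv_grid(cylinder, (0.0, 2 * math.pi), (0.0, 2.0), n_u=5, n_v=3)
print(len(grid), len(grid[0]))
```

Because every face yields a grid of the same shape regardless of its geometry, the grids can be treated like small multi-channel images, which is what makes an image-style CNN applicable per face.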

In BRepNet (Paper, Code) we show that convolutional kernels can be applied with respect to oriented coedges to outperform both network and heuristic methods on segmentation.
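For readers unfamiliar with coedges, the sketch below shows one common way to store them, assuming a half-edge style layout in which each coedge knows the next and previous coedge around its face and its mate on the neighboring face. The `Coedge` class, `build_coedges`, `walk`, and the tetrahedron data are hypothetical illustrations, not the BRepNet implementation; the actual kernels and input features are described in the paper.

```python
from dataclasses import dataclass

@dataclass
class Coedge:
    face: str
    start: int
    end: int
    next: int = -1   # index of the following coedge around the same face
    prev: int = -1   # index of the preceding coedge around the same face
    mate: int = -1   # index of the opposite-direction coedge on the adjacent face

# Hypothetical tetrahedron with consistently oriented face loops.
TETRA_FACES = {"f0": [0, 2, 1], "f1": [0, 1, 3], "f2": [1, 2, 3], "f3": [0, 3, 2]}

def build_coedges(faces):
    """Create one coedge per (face, directed edge) and wire up next/prev/mate."""
    coedges, lookup = [], {}
    for face, loop in faces.items():
        first, n = len(coedges), len(loop)
        for i in range(n):
            start, end = loop[i], loop[(i + 1) % n]
            lookup[(start, end)] = len(coedges)
            coedges.append(Coedge(face, start, end))
        for i in range(n):
            coedges[first + i].next = first + (i + 1) % n
            coedges[first + i].prev = first + (i - 1) % n
    for coedge in coedges:
        coedge.mate = lookup[(coedge.end, coedge.start)]
    return coedges

def walk(coedges, start, steps):
    """Follow a sequence of 'next' / 'prev' / 'mate' hops from a starting coedge."""
    index = start
    for step in steps:
        index = getattr(coedges[index], step)
    return index

coedges = build_coedges(TETRA_FACES)
# Hypothetical neighborhood: the coedge itself plus a few short walks from it.
walks = [[], ["next"], ["prev"], ["mate"], ["mate", "next"]]
neighborhood = [walk(coedges, 0, steps) for steps in walks]
print([coedges[i].face for i in neighborhood])
```

Since every coedge has exactly one next, previous, and mate, the same list of walks picks out a fixed-size, consistently ordered neighborhood around any coedge, which is the property that lets a convolution-like kernel be defined on the topology.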

Along with BRepNet we also release a new segmentation dataset with over 35k CAD models in B-Rep, mesh, and point cloud representations.

So if you have access to solid model data, it can really help with classification and segmentation. But what about other tasks? One long-sought goal in CAD is to reverse engineer a CAD model when the modeling history is not available. We tackle this problem with a new representation, the Zone Graph (Paper, Code), where each zone is a solid region formed by extending all B-Rep faces and using them to partition space.

Finally, in a CVPR workshop paper we explore how we might synthesize solid models by first generating plausible engineering sketches using transformers (Paper).

Created By: Autodesk Research / MIT / Brown University / UCL

Co-Authors: Pradeep Kumar Jayaraman, Joe Lambourne, Xianghao Xu, Wenzhe Peng, Aditya Sanghi, Yewen Pu, Hang Chu, Tao Du, Wojciech Matusik, Hooman Shayani, Armando Solar-Lezama, Daniel Ritchie, Thomas Davies, Nigel Morris, Peter Meltzer, Jieliang (Rodger) Luo, Chin-Yi Cheng