JoinABLe

Ever wondered how physical objects with hundreds of parts get assembled in CAD? It's a tedious process that we automate by learning how pairs of parts connect to form joints.

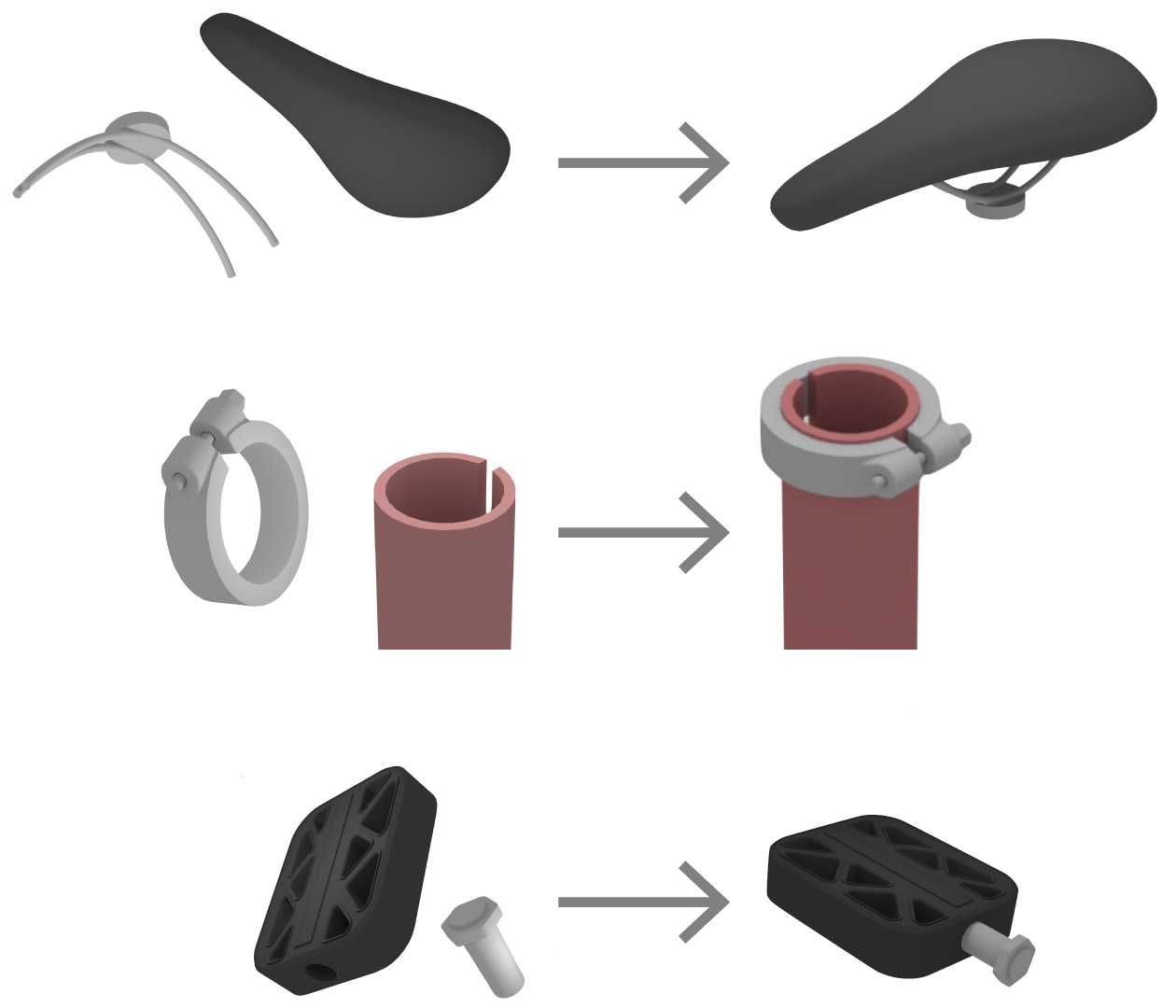

So what does joint setup look like in CAD today? Designers manually pick edges or faces on each part, and a joint is formed that constrains the two parts to one another. Once constrained, the parts move as one.

However, doing this over and over again is time-consuming and error-prone...

What if we could automatically create a joint to assemble a pair of parts just by looking at their shape? Could we do this without any object category labels or human guidance? Here’s how we tackle it...

We take a bottom-up approach to learning how parts connect locally. We frame the problem as a categorical one and make direct predictions on a solid model's discrete set of faces and edges. We represent each part as a graph and predict a single joint connection between the two parts.
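To make the categorical framing concrete, here is a minimal sketch, assuming each face and edge of a part is a graph node that already carries an embedding vector from some graph network (not shown). A hypothetical dot-product scorer rates every (entity in part A, entity in part B) pair, and a single softmax over all pairs means exactly one pair is chosen as the joint. The function names and the scorer are illustrative assumptions, not the JoinABLe implementation; see the linked repository for the actual architecture.

```python
import math
from typing import List, Tuple

Vector = List[float]

def pair_logits(emb_a: List[Vector], emb_b: List[Vector]) -> List[List[float]]:
    """Hypothetical scorer: dot product for every (entity of A, entity of B) pair."""
    return [[sum(x * y for x, y in zip(a, b)) for b in emb_b] for a in emb_a]

def pair_probs(emb_a: List[Vector], emb_b: List[Vector]) -> List[List[float]]:
    """Softmax over ALL N_a * N_b pairs, so the pairs compete for a single joint."""
    logits = pair_logits(emb_a, emb_b)
    peak = max(v for row in logits for v in row)  # subtract max for numerical stability
    exps = [[math.exp(v - peak) for v in row] for row in logits]
    total = sum(v for row in exps for v in row)
    return [[v / total for v in row] for row in exps]

def predict_joint(emb_a: List[Vector], emb_b: List[Vector]) -> Tuple[int, int, float]:
    """Return (entity index in A, entity index in B, probability) of the top pair."""
    probs = pair_probs(emb_a, emb_b)
    i, j = max(((i, j) for i in range(len(probs)) for j in range(len(probs[i]))),
               key=lambda ij: probs[ij[0]][ij[1]])
    return i, j, probs[i][j]

def joint_loss(emb_a: List[Vector], emb_b: List[Vector], label: Tuple[int, int]) -> float:
    """Cross-entropy against the ground-truth pair chosen by the designer."""
    i, j = label
    return -math.log(pair_probs(emb_a, emb_b)[i][j])

if __name__ == "__main__":
    emb_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 faces/edges in part A
    emb_b = [[0.0, 2.0], [1.0, 0.0]]              # 2 faces/edges in part B
    print(predict_joint(emb_a, emb_b)[:2])        # (1, 0)
```

Because the prediction is a choice among the model's own faces and edges rather than a regressed coordinate, the output is always a valid B-Rep entity that a CAD joint can reference directly.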

The predicted faces/edges give us a joint axis to align the two parts and the final pose can be recovered using a neurally guided search.
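One way to picture the alignment step: treat the joint axis derived from each part's predicted entity as an origin plus a direction, rotate part B so its axis lies on part A's, then enumerate the remaining degrees of freedom (flipping the axis, rotating about it, sliding along it) and keep the lowest-cost candidate. This is a minimal sketch under stated assumptions: the (origin, direction) axis representation, the discrete candidate grid, and the `cost` callable are all hypothetical, and a plain exhaustive search stands in for JoinABLe's neurally guided search.

```python
import math
from itertools import product
from typing import Callable, List, Sequence, Tuple

Vec = Tuple[float, float, float]
Mat = List[List[float]]
Axis = Tuple[Vec, Vec]  # hypothetical (origin, direction) of a joint axis

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)

def mat_mul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def mat_vec(m: Mat, v: Vec) -> Vec:
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))

def rodrigues(w: Vec, s: float, c2: float) -> Mat:
    """I + s*[w]x + c2*[w]x^2, the shared form of both rotation formulas below."""
    k = [[0.0, -w[2], w[1]], [w[2], 0.0, -w[0]], [-w[1], w[0], 0.0]]
    k2 = mat_mul(k, k)
    return [[float(i == j) + s * k[i][j] + c2 * k2[i][j] for j in range(3)] for i in range(3)]

def axis_angle(axis: Vec, theta: float) -> Mat:
    """Rotation by theta (counterclockwise) about a unit axis."""
    return rodrigues(normalize(axis), math.sin(theta), 1.0 - math.cos(theta))

def align(u: Vec, v: Vec) -> Mat:
    """Rotation taking direction u onto direction v."""
    u, v = normalize(u), normalize(v)
    c = dot(u, v)
    if c < -1.0 + 1e-9:  # antiparallel: turn 180 degrees about any perpendicular axis
        helper = (1.0, 0.0, 0.0) if abs(u[0]) < 0.9 else (0.0, 1.0, 0.0)
        return axis_angle(cross(u, helper), math.pi)
    return rodrigues(cross(u, v), 1.0, 1.0 / (1.0 + c))

def apply_pose(r: Mat, t: Vec, x: Vec) -> Vec:
    rx = mat_vec(r, x)
    return tuple(rx[i] + t[i] for i in range(3))

def pose_for(axis_a: Axis, axis_b: Axis, flip: bool, theta: float, offset: float) -> Tuple[Mat, Vec]:
    """Rigid transform x -> R x + t that puts part B's axis on part A's axis."""
    (oa, da), (ob, db) = axis_a, axis_b
    da = normalize(da)
    target = tuple(-x for x in da) if flip else da
    r = mat_mul(axis_angle(da, theta), align(db, target))  # align, then spin about A's axis
    rob = mat_vec(r, ob)
    t = tuple(oa[i] + offset * da[i] - rob[i] for i in range(3))  # B's origin slides along A's axis
    return r, t

def search_pose(axis_a: Axis, axis_b: Axis, cost: Callable[[Mat, Vec], float],
                angles: Sequence[float], offsets: Sequence[float]):
    """Exhaustively score (flip, angle, offset) candidates; the lowest cost wins."""
    best = None
    for flip, theta, offset in product((False, True), angles, offsets):
        r, t = pose_for(axis_a, axis_b, flip, theta, offset)
        score = cost(r, t)  # hypothetical stand-in for learned guidance
        if best is None or score < best[0]:
            best = (score, flip, theta, offset, r, t)
    return best
```

The `cost` callable is where learned guidance would plug in; for example, it could favor candidates that keep the predicted faces in contact without the parts overlapping. A guided search would also rank or prune candidates instead of scoring every one.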

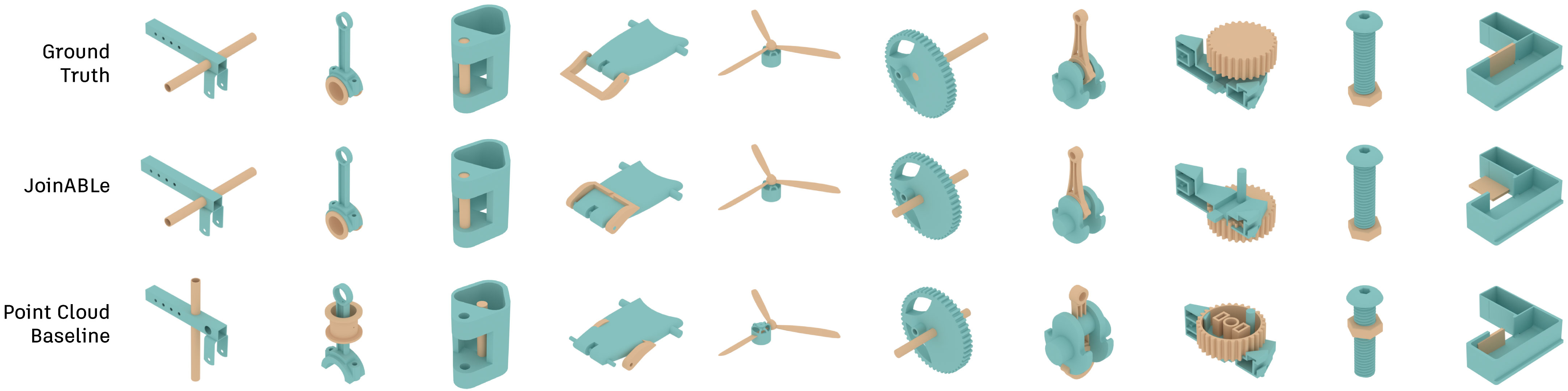

JoinABLe outperforms all of the other methods we compared against and is within 0.5% of human performance on the joint axis prediction task. The image below shows how JoinABLe assembles pairs of parts in a more realistic way than a point cloud baseline.

We release our code along with a new dataset of multi-part CAD assemblies containing rich information on joints, contact surfaces, holes, and the underlying assembly graph structure.

Paper: JoinABLe: Learning Bottom-up Assembly of Parametric CAD Joints (CVPR 2022)

Code: https://github.com/AutodeskAILab/JoinABLe

Dataset: https://github.com/AutodeskAILab/Fusion360GalleryDataset

Created By: Autodesk Research / MIT

Co-Authors: Pradeep Kumar Jayaraman, Hang Chu, Yunsheng Tian, Yifei Li, Daniele Grandi, Aditya Sanghi, Linh Tran, Joe Lambourne, Wojciech Matusik, Armando Solar-Lezama.