Sequence-based CAD Generative Models

How can machine learning represent and generate 2D sketches and 3D mechanical parts in ways that are structured, controllable, and useful for design workflows? Together with collaborators from Simon Fraser University, we developed a pair of generative models for CAD aimed at learning how humans design:

SkexGen, a model that disentangles topology, geometry, and extrusion into separate codebooks for generating sketches and extruded parts.

Hierarchical Neural Coding (HNC), an extension of this work that introduces a multi-level codebook structure, enabling even more flexible and hierarchical control over generation and completion.

These models open up new possibilities for AI-assisted CAD, allowing for partial edits, hybrid designs, and interactive generation grounded in the patterns of real-world design data.

Learning From Human Design Sequences

Parametric CAD modeling relies on sketch and extrude operations to define 3D shapes. These sketches are typically defined with constraints and dimensions that support quick edits and design variations. However, setting up constraints is time-consuming and requires expert knowledge.

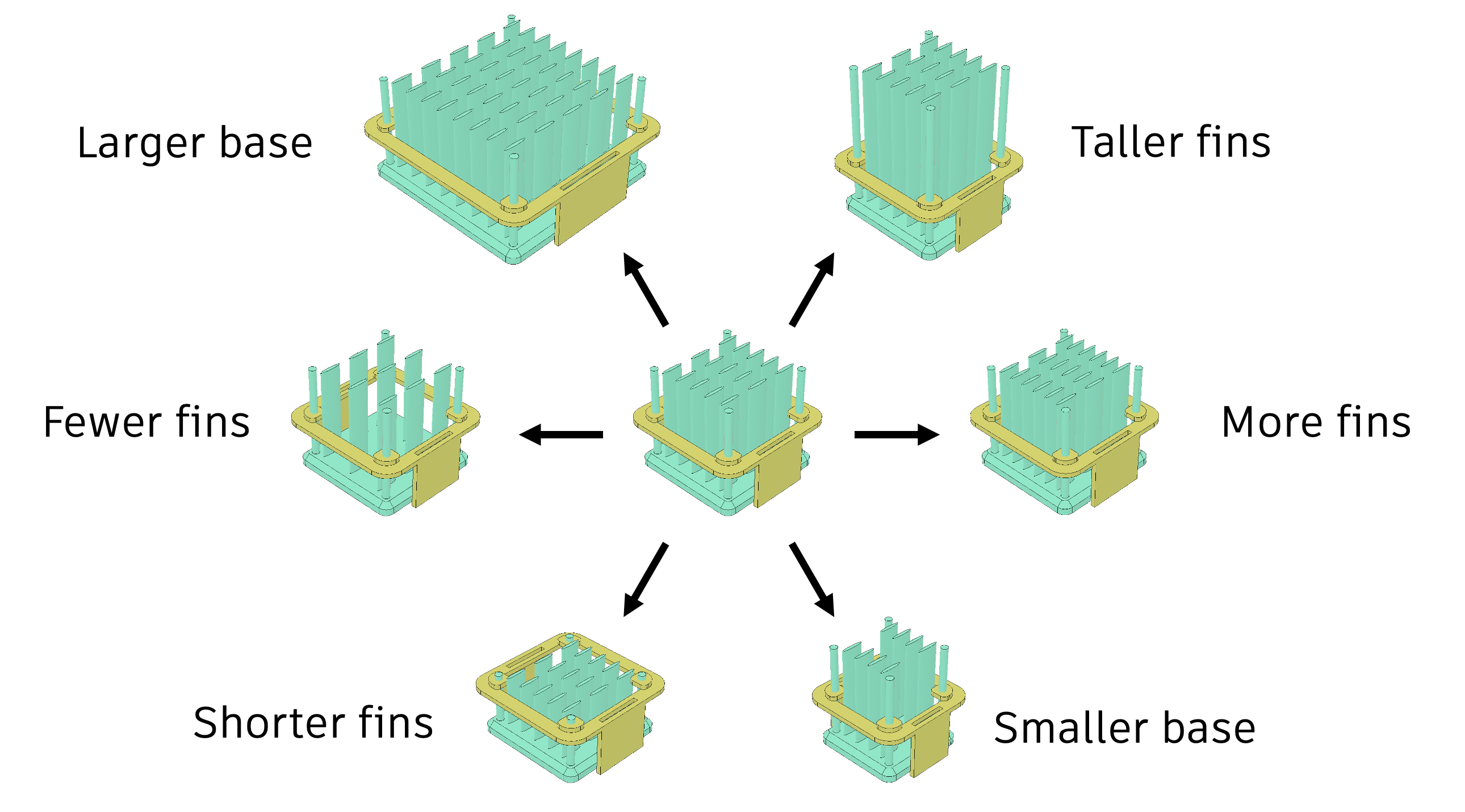

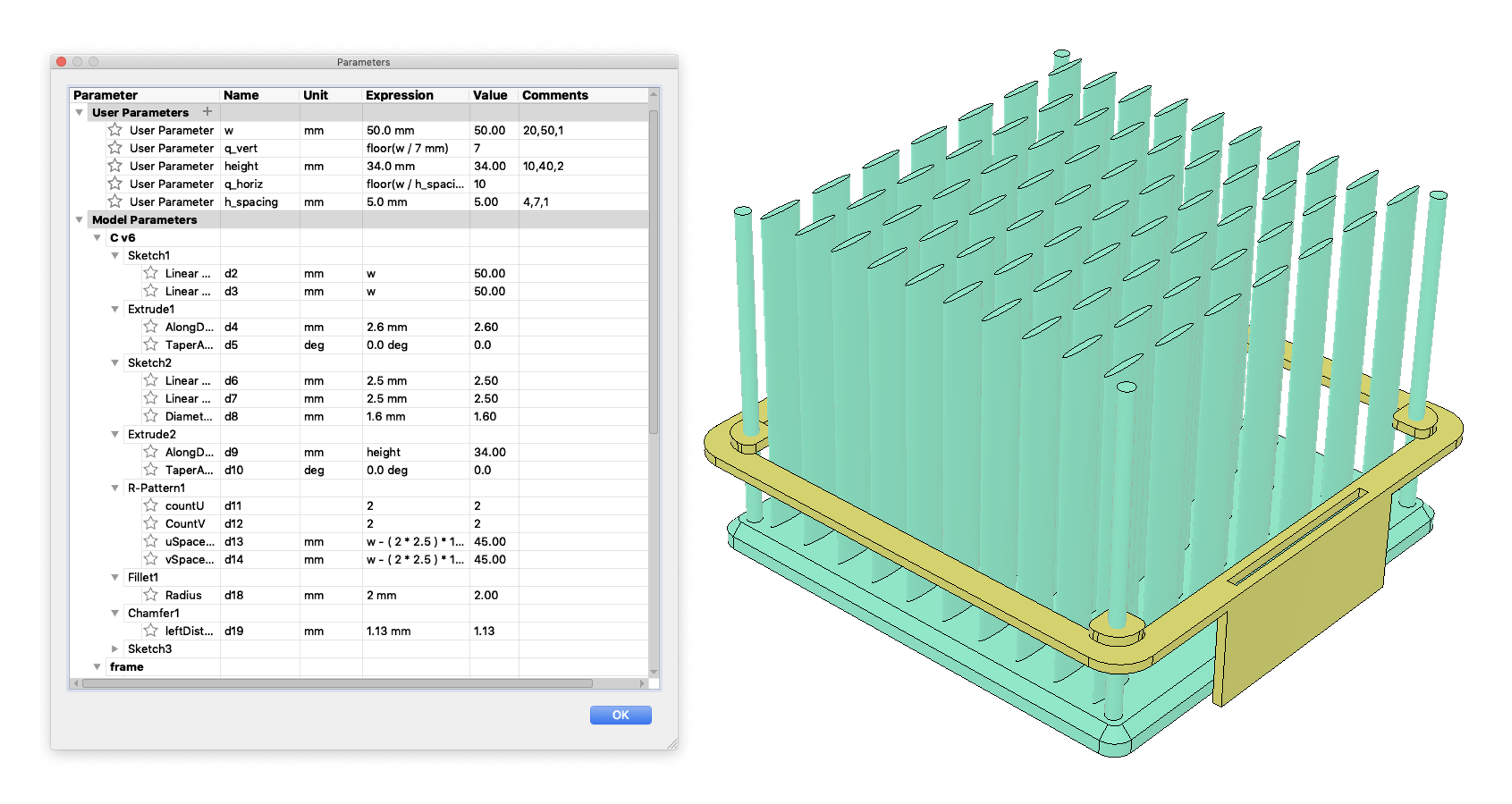

Take the example of a heat sink design. Parametric constraints can be used to drive the number of fins, spacing between fins, and the overall body shape. This allows the design to be updated quickly and consistently across the model.

However, defining such constraints is a manual process that requires a deep understanding of the underlying geometry. Here are the parameters driving the heat sink design; setting them up is a job for an expert.
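To make "parameters driving a design" concrete, here is a minimal, hypothetical sketch. The class `HeatSinkParams` and its parameterization (evenly spaced straight fins, with the outer fins flush with the edges of the base) are our own illustrative assumptions, not the model shown in the figures. Every fin position is derived from three numbers, so changing one parameter updates the whole layout consistently.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class HeatSinkParams:
    """Hypothetical driving parameters for a straight-fin heat sink."""
    base_width: float     # overall width of the body
    fin_count: int        # number of fins
    fin_thickness: float  # thickness of each fin

    def fin_spacing(self) -> float:
        """Gap between neighboring fins, assuming outer fins sit flush with the base edges."""
        if self.fin_count < 2:
            raise ValueError("need at least two fins")
        free_width = self.base_width - self.fin_count * self.fin_thickness
        if free_width <= 0:
            raise ValueError("fins do not fit on the base")
        return free_width / (self.fin_count - 1)

    def fin_positions(self) -> List[Tuple[float, float]]:
        """(start, end) x-extent of every fin, derived entirely from the parameters."""
        pitch = self.fin_thickness + self.fin_spacing()
        return [(i * pitch, i * pitch + self.fin_thickness) for i in range(self.fin_count)]


params = HeatSinkParams(base_width=50.0, fin_count=6, fin_thickness=2.5)
print(params.fin_spacing())        # 7.0
print(params.fin_positions()[-1])  # (47.5, 50.0)
```

Changing `fin_count` alone re-derives the spacing and every fin position. Writing these relationships by hand for a real part is exactly the expert effort described above.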

What if we could learn from how people design and use that to generate new, editable designs automatically? What if we could capture the patterns and structure embedded in real-world sketches and parts and use that as the basis for fast, controllable design generation?

SkexGen (SKetch and EXtrude GENerative model)

SkexGen tackles this problem by first separating topology from geometry in our representation, so that each can be controlled independently downstream.

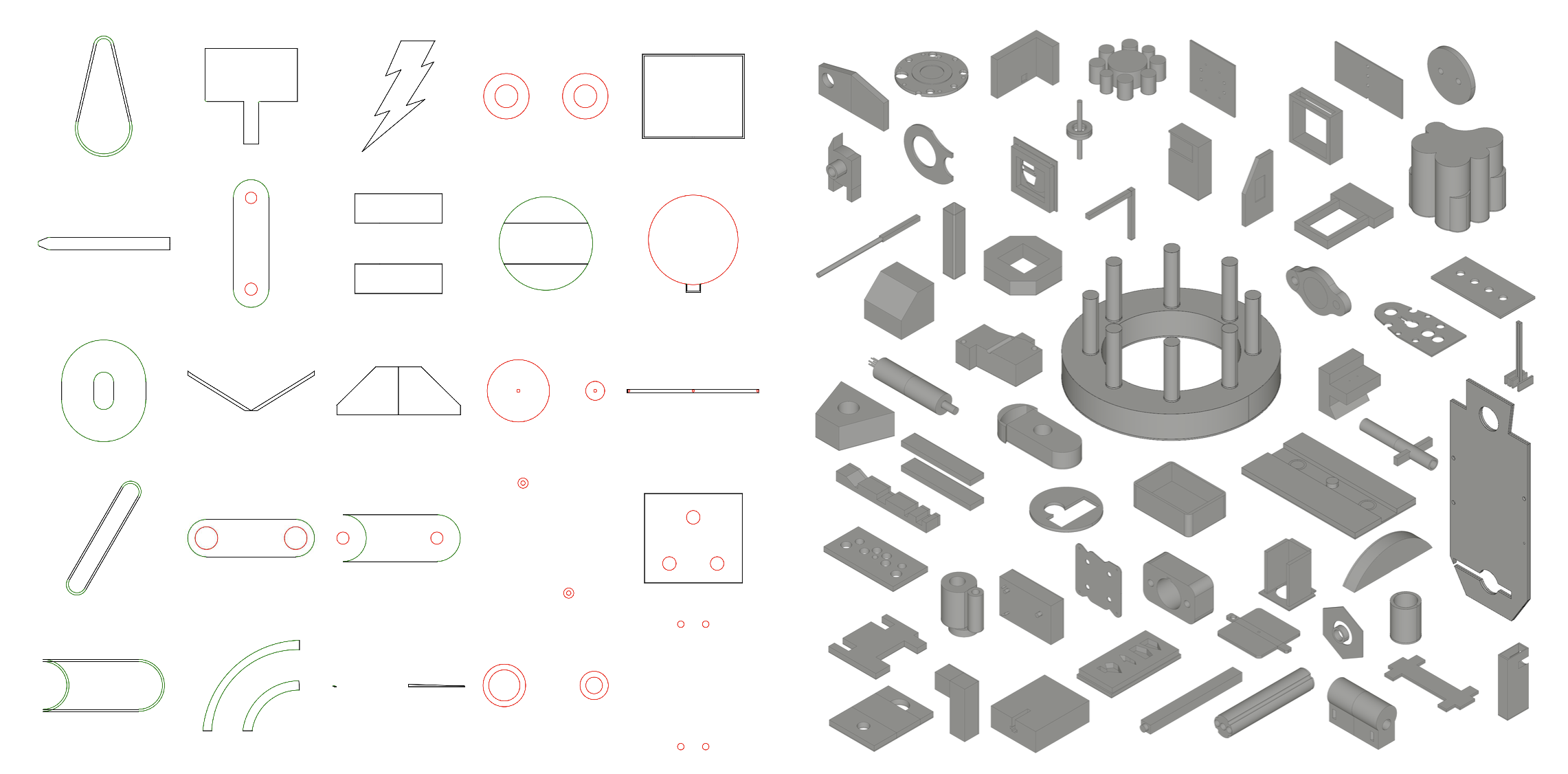

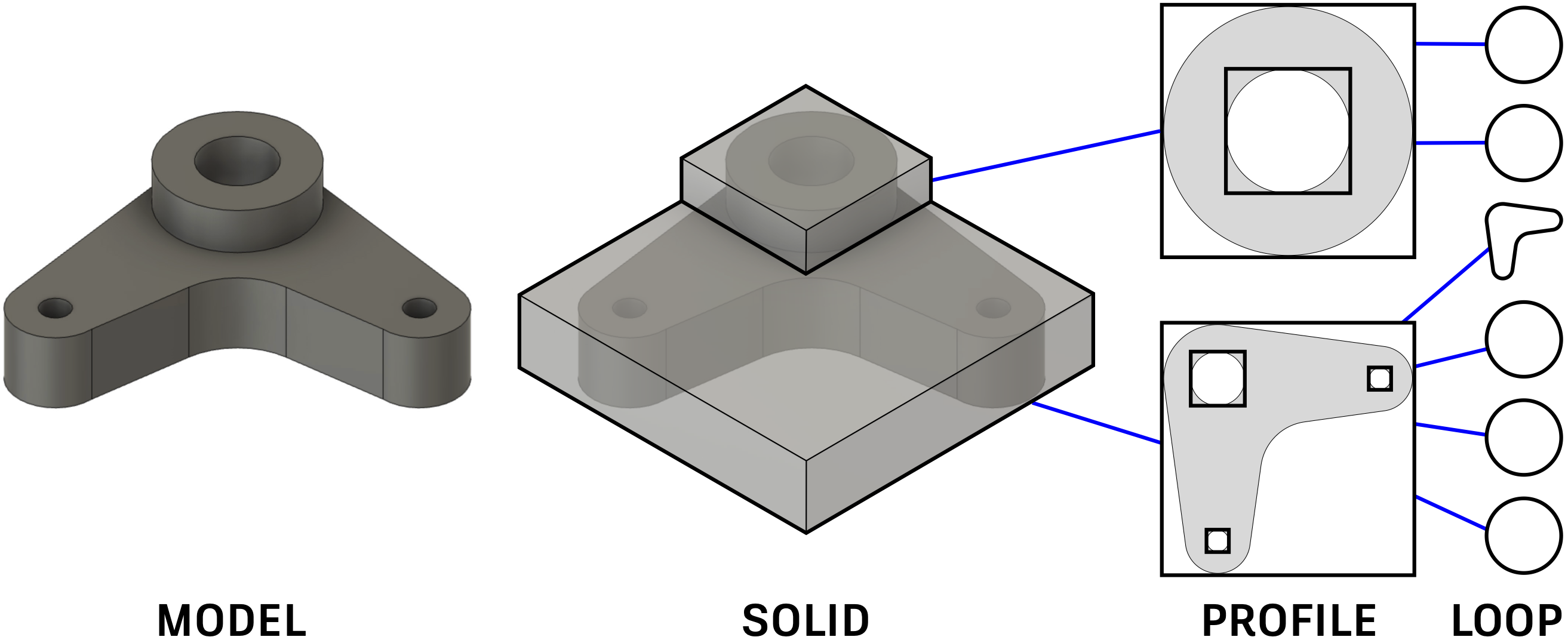

As with prior work, we generate a sequence of tokens based on the hierarchy of curves, loops, faces, and extrusions found in sketch and extrude construction sequences.
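As a rough illustration of flattening that hierarchy, the sketch below walks faces, loops, and curves and emits quantized coordinates plus end-of-level marker tokens. The marker strings, the 6-bit default quantization, the [-1, 1] coordinate range, and the choice to describe each curve by its start point are all hypothetical simplifications; SkexGen's actual token vocabulary differs, and extrusion tokens are omitted here.

```python
from typing import List, Tuple, Union

Point = Tuple[float, float]
Curve = List[Point]   # points describing one curve (here: just its start point)
Loop = List[Curve]    # closed chain of curves
Face = List[Loop]     # outer loop first, then any inner loops
Token = Union[int, str]

# Hypothetical end-of-level markers; not SkexGen's real vocabulary.
END_CURVE, END_LOOP, END_FACE, END_SKETCH = "<ec>", "<el>", "<ef>", "<es>"


def quantize(v: float, bits: int = 6) -> int:
    """Map a coordinate assumed to lie in [-1, 1] to an integer bin."""
    levels = 2 ** bits
    idx = round((v + 1.0) / 2.0 * (levels - 1))
    return max(0, min(levels - 1, idx))


def tokenize_sketch(faces: List[Face], bits: int = 6) -> List[Token]:
    """Flatten faces -> loops -> curves -> points into one token sequence."""
    tokens: List[Token] = []
    for face in faces:
        for loop in face:
            for curve in loop:
                for x, y in curve:
                    tokens += [quantize(x, bits), quantize(y, bits)]
                tokens.append(END_CURVE)
            tokens.append(END_LOOP)
        tokens.append(END_FACE)
    tokens.append(END_SKETCH)
    return tokens
```

For example, a square face with one loop of four line segments, each given by its start point, becomes a short sequence of coordinate bins interleaved with `<ec>` markers, closed by `<el>`, `<ef>`, and `<es>`. The end markers let a decoder recover the nesting from a flat sequence.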

Here is where it gets interesting: these tokens are fed into separate Transformer encoders that encode the topology, geometry, and extrude information into separate quantized codebooks. Separate sketch and extrude decoders then generate a CAD construction sequence.
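The "quantized codebook" step can be illustrated with the nearest-neighbor lookup used in vector quantization: each encoder output is replaced by the index of its closest codebook entry. This is a minimal sketch under simplifying assumptions: one vector per stream, tiny hand-written codebooks, and no training machinery (commitment loss, straight-through gradients). The function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def nearest_code(z: Sequence[float], codebook: List[Sequence[float]]) -> Tuple[int, List[float]]:
    """Return (index, vector) of the codebook entry closest to z in squared L2 distance."""
    best_idx, best_dist = -1, math.inf
    for i, c in enumerate(codebook):
        d = sum((a - b) ** 2 for a, b in zip(z, c))
        if d < best_dist:
            best_idx, best_dist = i, d
    return best_idx, list(codebook[best_idx])


def quantize_streams(encodings: Dict[str, Sequence[float]],
                     codebooks: Dict[str, List[Sequence[float]]]) -> Dict[str, int]:
    """Quantize each encoder output against its own, separate codebook."""
    return {name: nearest_code(z, codebooks[name])[0] for name, z in encodings.items()}


codebooks = {
    "topology": [[0.0, 0.0], [1.0, 1.0]],
    "geometry": [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0]],
    "extrude": [[5.0], [-5.0]],
}
encodings = {"topology": [0.9, 0.8], "geometry": [1.9, 2.1], "extrude": [-4.0]}
print(quantize_streams(encodings, codebooks))
```

Keeping one codebook per stream is what makes the codes disentangled: a topology index says nothing about geometry, so each can later be swapped independently.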

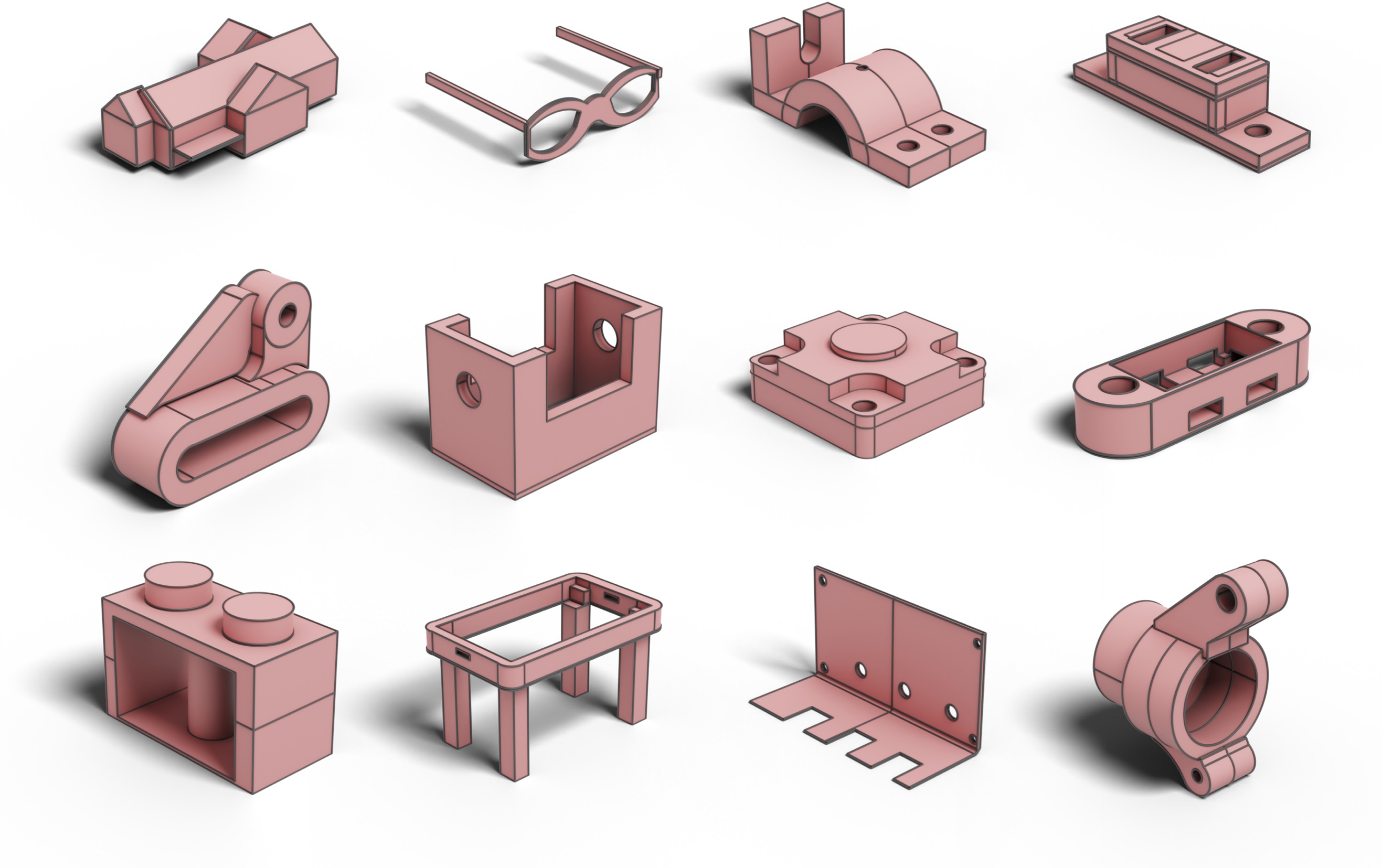

The same process can be used to generate 3D parts. At inference time, we can sample from the codebooks and decode them into sketches or parts.

The disentangled structure allows us to generate and manipulate designs in meaningful ways. When generating 2D sketches or 3D parts, we can hold the code in any one of the codebooks fixed to produce results that share similar topology, geometry, or extrusion parameters. Here we keep the topology code the same, so the designs share a similar overall structure.

Code mixing can also turn 😀 into 😐.
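Code mixing can be sketched as keeping one stream's code and drawing the others from elsewhere. In the hypothetical example below, `mix_codes` and `variations` are illustrative names, and uniform random sampling is only a placeholder: SkexGen uses a learned model to sample codes, and the decoder that turns codes back into sketches or parts is not shown.

```python
import random
from typing import Dict, List

STREAMS = ("topology", "geometry", "extrude")


def mix_codes(base: Dict[str, int], donor: Dict[str, int], keep: str) -> Dict[str, int]:
    """Keep the `keep` stream's code from `base` and take all other codes from `donor`."""
    if keep not in STREAMS:
        raise ValueError(f"unknown stream: {keep}")
    return {s: (base[s] if s == keep else donor[s]) for s in STREAMS}


def variations(base: Dict[str, int], codebook_sizes: Dict[str, int],
               keep: str, n: int, seed: int = 0) -> List[Dict[str, int]]:
    """Produce n code sets sharing base[keep]; other codes are drawn uniformly (placeholder)."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        donor = {s: rng.randrange(codebook_sizes[s]) for s in STREAMS}
        out.append(mix_codes(base, donor, keep))
    return out
```

Decoding every result of `variations(base, sizes, keep="topology", n=...)` would, under these assumptions, yield designs with the same overall structure but different shapes and extrusions, as in the figure.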

Hierarchical Neural Coding for Controllable CAD Model Generation

While SkexGen separates design elements into discrete codebooks, our follow-up work, Hierarchical Neural Coding (HNC), takes this further by introducing structure tied to the hierarchy of a CAD model.

As shown above, a sketch and extrude CAD model is naturally hierarchical, consisting of several nested entities: loops define individual 2D contours, profiles represent closed regions composed of one outer loop and potentially multiple inner loops, solids correspond to extruded profiles, and the complete model is composed of one or more solids. Our goal is to enable both local and global control in the generation process, where users can edit any of these entities, whether a loop, profile, solid, or the full model, and have the rest of the design update automatically in a coherent way.
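The entity hierarchy described above maps naturally onto a small tree of data types. The sketch below is a hypothetical illustration, not HNC's internal representation: loops are simplified to polygons, a solid extrudes one or more profiles by a single assumed one-sided distance, and the `rect` helper is our own.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Loop:
    points: List[Point]  # closed 2D contour, simplified here to a polygon


@dataclass
class Profile:
    outer: Loop                                      # exactly one outer loop
    inner: List[Loop] = field(default_factory=list)  # zero or more inner loops (holes)


@dataclass
class Solid:
    profiles: List[Profile]  # profiles extruded together
    extent: float            # extrusion distance (assumed one-sided)


@dataclass
class Model:
    solids: List[Solid]

    def counts(self) -> Dict[str, int]:
        """Number of entities at each level of the hierarchy."""
        profiles = [p for s in self.solids for p in s.profiles]
        loops = sum(1 + len(p.inner) for p in profiles)
        return {"solids": len(self.solids), "profiles": len(profiles), "loops": loops}


def rect(x0: float, y0: float, x1: float, y1: float) -> Loop:
    """Hypothetical helper: axis-aligned rectangular loop."""
    return Loop([(x0, y0), (x1, y0), (x1, y1), (x0, y1)])


plate = Solid([Profile(rect(0, 0, 10, 10), [rect(4, 4, 6, 6)])], extent=1.0)  # plate with a hole
leg = Solid([Profile(rect(0, 0, 1, 1))], extent=5.0)
model = Model([plate, leg])
```

Because every entity is a node in this tree, an edit can target a single loop (for instance, resizing the hole) without touching the other nodes. Keeping the rest of the design consistent with such an edit is the part the model has to learn.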

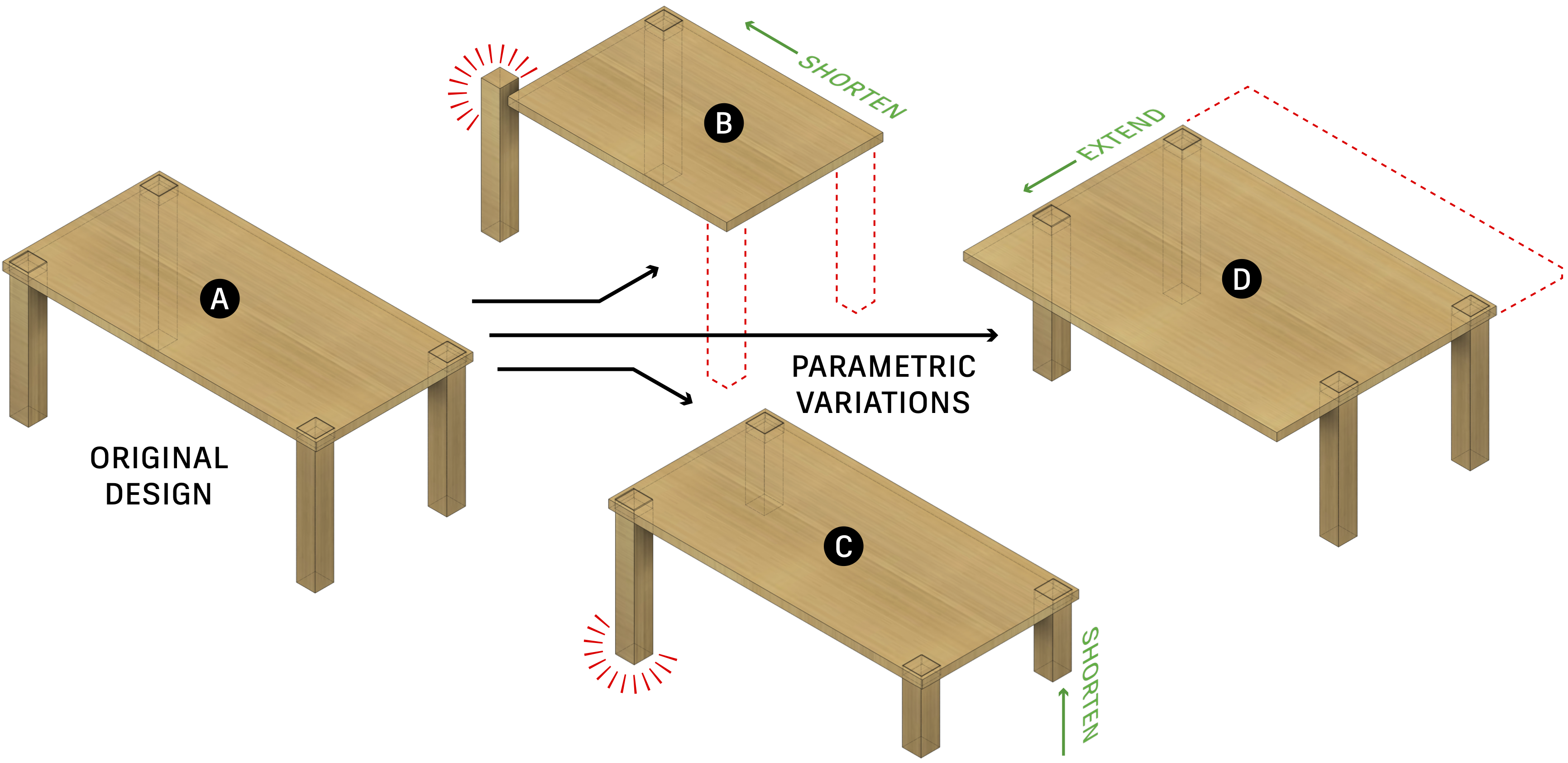

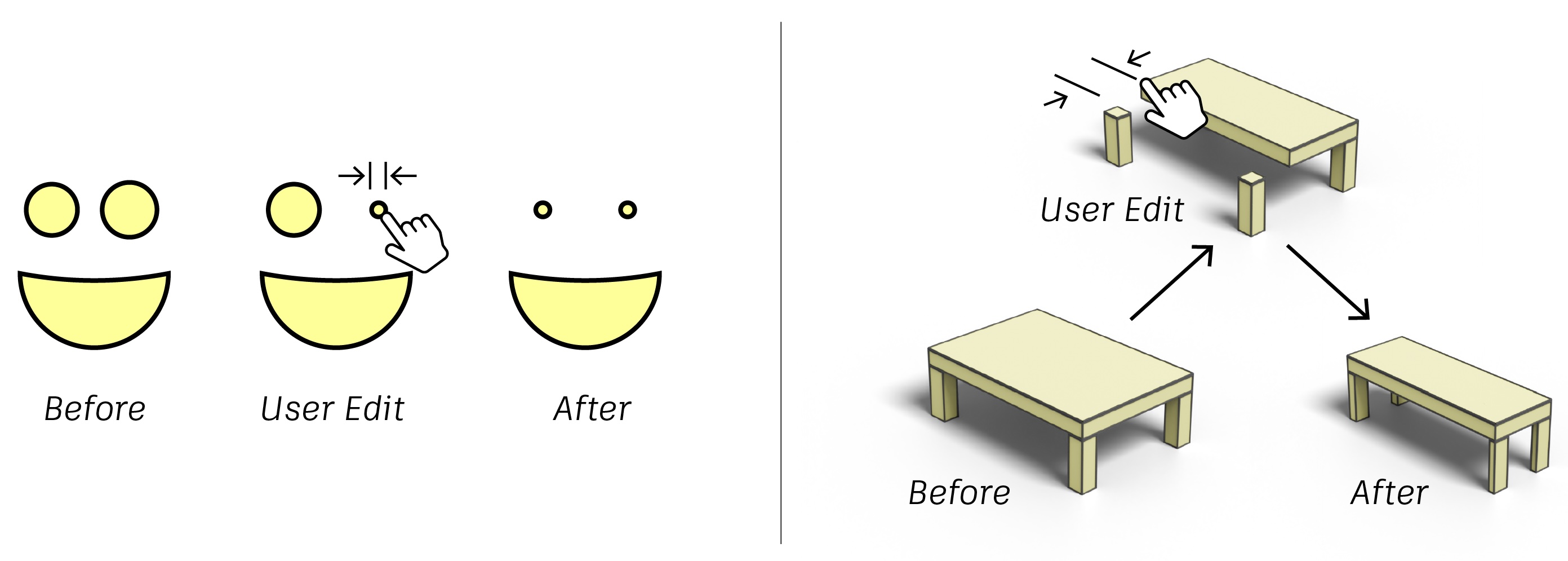

Unfortunately, today's tools do not work this way. Parametric CAD requires specialized skills and can easily fail when constraints are missing or incomplete. For example, simple edits to the table design above, such as changing the leg length or tabletop size, can break the original intent and result in misaligned or distorted geometry. These issues highlight the limitations of current tools and the need for ML models that better preserve design intent.

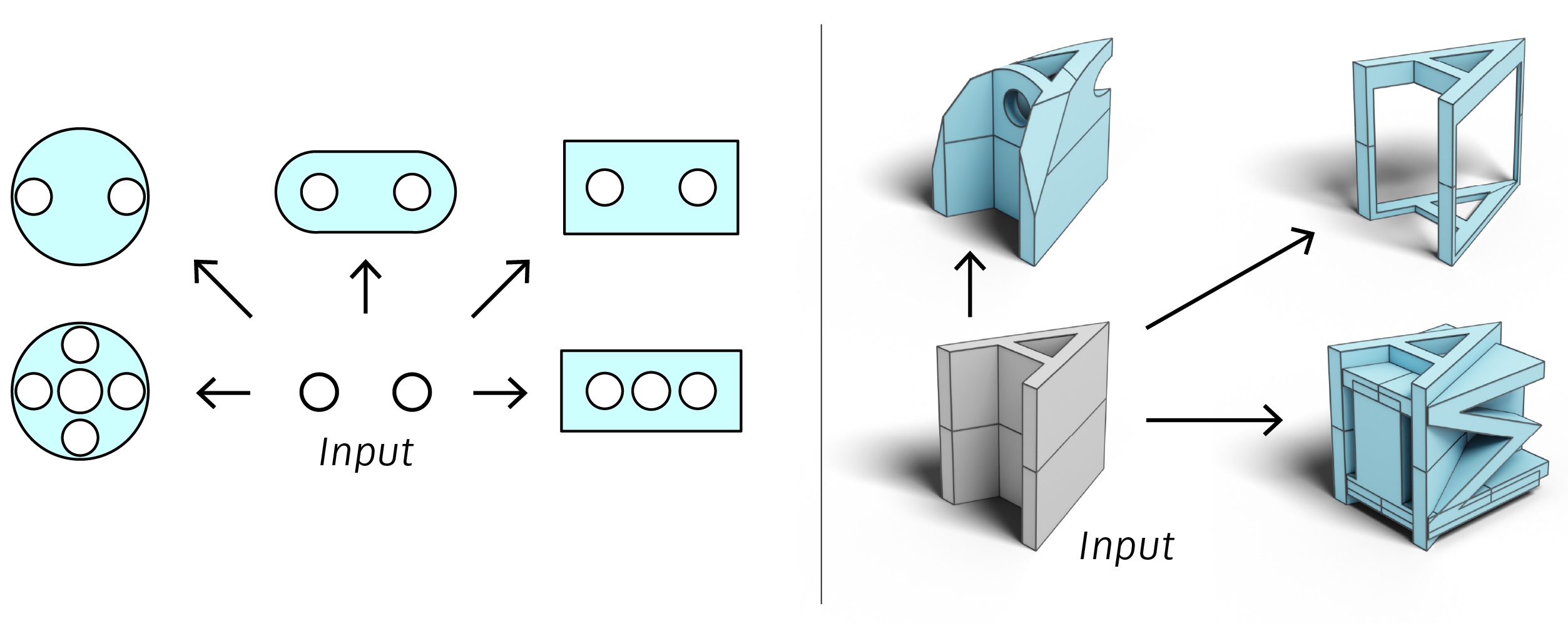

Our HNC generative model captures CAD design intent as a three-level tree of neural codes, spanning local geometry to global part structure. This representation enables CAD models to be generated or completed from partial inputs, from directly specified code trees, or unconditionally (shown above). The model learns these hierarchical codebooks using a novel VQ-VAE with masked skip connections, producing clean, interpretable representations learned from a large dataset of sketch-and-extrude models. A two-stage transformer then maps between geometry and code trees, allowing flexible, controllable generation. The final designs are output as modeling operations and converted into a standard B-Rep format for use in CAD tools.

The figure above illustrates how user edits can be automatically propagated across the design to maintain a consistent structure and preserve design intent. Whether the user modifies the spacing of facial features in a sketch (left) or adjusts the dimensions of a table design (right), the model updates the rest of the geometry coherently.

The figure above demonstrates the model’s ability to autocomplete partial geometry into complete, realistic designs. Given an incomplete sketch (left) or part (right), the model generates multiple coherent completions, each reflecting plausible design intent.
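Conditioning on a partial input can be pictured as a code tree in which some codes are fixed and others are left blank. The sketch below assumes the three levels are solid, profile, and loop codes, mirroring the entity hierarchy described earlier; `CodeNode`, `complete`, and `codes` are hypothetical names. Uniform random sampling stands in for HNC's two-stage transformer, so these completions carry no learned design intent; only the fill-in-the-blanks structure is illustrated.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class CodeNode:
    level: str                 # "solid", "profile", or "loop" (assumed level names)
    code: Optional[int]        # codebook index, or None if left for the model to fill
    children: List["CodeNode"] = field(default_factory=list)


def complete(node: CodeNode, codebook_sizes: dict, rng: random.Random) -> CodeNode:
    """Return a new tree with every missing code filled; user-fixed codes are kept.

    Uniform sampling is a placeholder for the learned transformer.
    """
    code = node.code if node.code is not None else rng.randrange(codebook_sizes[node.level])
    return CodeNode(node.level, code, [complete(c, codebook_sizes, rng) for c in node.children])


def codes(node: CodeNode) -> List[Tuple[str, Optional[int]]]:
    """Pre-order list of (level, code) pairs."""
    out = [(node.level, node.code)]
    for child in node.children:
        out += codes(child)
    return out


# Partial input: the profile and one loop are fixed; the solid code and the other loop are open.
partial = CodeNode("solid", None, [CodeNode("profile", 4, [CodeNode("loop", 1), CodeNode("loop", None)])])
sizes = {"solid": 8, "profile": 16, "loop": 32}
completions = [complete(partial, sizes, random.Random(seed)) for seed in range(3)]
```

Drawing several completions from the same partial tree mirrors the multiple candidate designs in the figure, while the codes the user fixed stay unchanged in every result.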

Reimagining Design Tools

Together, SkexGen and HNC reflect our ongoing effort to learn expressive and controllable representations for CAD design. With SkexGen, we introduced a disentangled codebook model that separates topology, geometry, and extrusion. With HNC, we take this further, learning hierarchical code structures that reflect how designs are composed and allowing more granular control over the generative process. Our aim is to build tools that support the creative intent of designers: tools that don’t replace human input but amplify it by making the design space more navigable, editable, and interactive.

SkexGen Paper: SkexGen: Autoregressive Generation of CAD Construction Sequences with Disentangled Codebooks (ICML 2022)

SkexGen Code: https://github.com/samxuxiang/SkexGen

HNC Paper: Hierarchical Neural Coding for Controllable CAD Model Generation

HNC Code: https://github.com/samxuxiang/hnc-cad

Created By: SFU / Autodesk Research

Co-Authors: Xiang Xu (Lead author), Joe Lambourne, Chin-Yi Cheng, Pradeep Kumar Jayaraman, Yasutaka Furukawa